Fig. 1 Header.

Abstract

Through the modification of pixels, we are altering our memories, which in effect changes our identity. Our memory is a complicated system: it defines who we are, and in return, we define which memories are recalled. Framed images, like a photo, serve as a subjective tool for recollecting memories. These memories are a representation of who we are and form the basis for possible growth. By creating new memories, we have an opportunity to rescript our identity. The deepfake technique, which uses a deep learning algorithm to transfer your face onto another person, could make you experience a memory that isn’t yours, yet. Deepfake is a perfect tool for seeing ourselves from an unseen third-person perspective. When applied to traumatic events, this perspective could offer an opportunity for objective observation, to better understand what is going on. Synthesised into a compelling video that familiarises you with a negative event, it could manifest itself as a new memory and thereby create a new identity. Thus I believe that deepfakes could be a tool for empowerment, in contrast to their current practice.

Fig. 2 Zeilen.

The other day I got a message from my uncle; he told me to take a look at a video and asked if I remembered it. It was a video of me, my brother and my nephew, his son, on a weekend trip. In the video, we were jumping off a small sailing boat that was our home for a couple of days. Yes, those days bring back memories, but those memories feel somewhat implied. I could never have seen myself swimming in the water from my own perspective, so I was now looking at these images like a stranger of sorts. My memories became entangled with the images from the video my uncle sent me. I know it’s me I’m seeing, but somehow it feels like a different life. It’s like watching a film while someone keeps telling me, yes, that’s you, that someone being my inner self. If my uncle hadn’t shown me that video, would I have been able to internalise my memories? This video, which captured a part of my life, has now become the main attraction of that weekend: it took all my internal memories of those days and executed a select, copy, paste, “replace all? Yes!” command.

Introduction

I am fascinated by how we become the person we are today. We collect memories throughout our lives and build upon these moments of identity formation. What happens if I can fabricate memories that shape us into the version of ourselves we want to be? Using emerging digital manipulation techniques, such as deep learning algorithms and face transformation tools, I want to research the effects of exposing yourself to a rescripted memory of something you have never done. I want to use these tools because they have been cast as part of a dystopian future, and I am confident that it isn’t the tool but the user who is responsible for creating a desirable outcome. Numerous people experience anxieties that limit their ability to fully live their lives. I think it’s important that we use technology to better our world and assist our fellow people. My main research question is: what are the possibilities and benefits of creating new memories through the use of deepfakes? In this document, I will first look into the making of memories through imagery. Next, I will delve into the visual culture and social media around digital manipulation, sharing the concerns around deepfakes while also trying to highlight their possibilities. I will then continue with the construction, altering and witnessing of memories and, because I am fascinated by film, some inspiring scenes and themes. My research approach is primarily based on anecdotal theorization, a contradiction in terms. But the anecdote offers a window onto reality. This makes it a useful approach for better understanding the effect of perceiving, which already takes place from the “I” perspective.

Capturing

When photography came into ordinary life, it was mainly used to capture life as it is. Photos, and likewise videos, were made by exposing a light-sensitive chemical layer to light, which transferred reality onto film. “The photograph was not only thought to be visually truthful; it was believed to be scientifically correct” (Schwartz 23). It was seen as a revolution in creating a true representation of life, compared to previous techniques like painting and illustration, in which your image was shaped by the vision of the artist. Photography was a tool to create the closest thing to the truth, although more a tool of temporary truth. Everything before and after isn’t recorded; as when making a film, once the director says cut, reality steps back into life. As Susan Sontag writes in her essay, “A way of certifying experience, taking photographs is also a way of refusing it, by limiting experience to a search for the photogenic, by converting experience into an image, a souvenir” (9). Photos are like limited, flat, square time machines for our mind. Each one is a momentary capture of time, like a memory, and it brings you back to a particular event. Have you ever tried to think beyond the photo-memory, to what happened next? I find it hard.

Unlike chemically made photographs, images nowadays are made with cameras that use sensors to translate reality into a digital representation of whatever is in front of them. When a human face is captured, it is technically nothing more than thousands of pixels, little coloured dots, organised into an image that we instinctively recognise as another human. This new method of photography is prone to manipulation. I remember my first experience with altering a photo. It was 2004, and I had taken a photo of myself to send via MSN, the chat application of the day, which ran on the computer. I noticed I had a zit on my face, so I opened the image in Microsoft Paint, picked the brush tool and turned the red pixels into my skin colour. Done. Rearranging pixels and altering content becomes easier every day; with the right tools, anyone can do it. Images aren’t an objective truth; they have always been a subjective truth. They are made through the eyes of the creator and captured by the sensor of a camera. Leaving the horror or compassion out of a photo can change its message significantly. The photographer chooses what you can and cannot see. And when a publisher crops an image in a fashionable style, the image deviates once more from the original perspective. In current times, altering one’s facial characteristics is just another step in perspective formation. Therefore, digitally altering an image could be seen as a natural evolution of the subjective perspective.

Fig. 3 Pixels.

Memorization

Photographs used to be the closest representation of reality that could be stored as a collection of memories. When someone captures your image, you exist: there is proof of your existence, and you can witness this proof again at any moment. As Pavel Buchler explains, photographs “keep under constant tension the fragile links between the residue of lived moments and memory, between where we have been and who we are (what we are always becoming)” (105). Memories define who we are. Nonetheless, we struggle with them. Going back and forth through our memories means constantly re-evaluating our identity; without those memories we would have nothing to hold on to. In Denis Villeneuve’s film Blade Runner 2049, replicants, which are humanoid beings, are given constructed memories of lives not lived. “…if you have authentic memories, you have real human responses…” the Memory Maker tells K (1:17:50-1:19:25). Memories give the replicants stability, identity and humanity; those memories build their character. We identify with memories that are ours. Therefore, when we start to modify or construct new memories, we can change our identity.

Fig. 4 Blade Runner 2049.

When you post a selfie on social media, it is usually a representation of how you perceive yourself. Just as I altered my photo to look better, so do many people today. When you post a manipulated photo with the intent for it to be seen as real, you are contributing to a constructed identity. Take a close relative of mine as an example. When she goes out and a picture is taken of her, she asks for the image so she can alter it to her liking; not having a double chin is now part of her established social identity. The photo is no longer a true memory of reality but a constructed tool for communicating her idealised identity. On the other hand, contributing false memories to the World Wide Web isn’t any different from contributing at all, since everything is subjectively orchestrated from a certain point of view. It is a difficult time to be on the internet and form grounded opinions: images, and now videos too, become more malleable every day, with new tools that can change our growing collective memory. Living in these post-truth times comes with its challenges.

Fake News

Video manipulation is becoming standard on social media, from the creation of memes to fake news. The ability to rapidly create fake content has been a key driver of fake news. What is it that makes fake news so terrifying? Simply editing and altering content can already convey a different message than the original video intended. The power of fake news lies especially in the spreading of the message, and social media platforms are like a petri dish for it. Belief begins to form when peers and like-minded people boast about and share the same story. As Yuval Noah Harari argues in his book Sapiens, we have conquered the world and joined forces because we are so susceptible to inventing, convincing others of and believing stories. The real problem arises when the collective memory of false events is adopted as reality. For example, when a video of Nancy Pelosi was slowed down to make her sound slurred or drunk, President Trump shared and retweeted it, and it was viewed approximately 6.37 million times (Ajder et al. 11). Even though the video has been identified as false, you could argue that five years from now people will still remember her as the slurring lady. The same mechanism is at work in the phenomenon called the Mandela effect. If you ask a group of people what Darth Vader says to Luke in The Empire Strikes Back, you’ll get two parties: one will say “Luke, I am your father” and the other will say “No, I am your father”. The latter is right. Here’s one reason why this split in collective memory could have occurred. Like-minded people come together to talk about their favourite lines from the movie, someone brings up the “Luke” version, a lot of people like it and share it with their peers, and so on. Eventually, the collective memory of the film bifurcates.
If this continues long enough, people will defend their version, up to the point where someone claims that our reality has split in two: some of us come from version one and the others from version two. The same thing happened with the death of Nelson Mandela; some believe he died in prison, while most of us recall reality as it is. Hence the name Mandela effect (Ratner). Fake news in itself isn’t that terrifying; it’s what we do with it. Manipulation of videos doesn’t end with the produced content. By distributing fake news, we are tearing down reality and creating a new collective memory.

Deepfake

Have you ever seen a video by Bad Lip Reading? This YouTube channel alters existing content into its own narrative. For example, it dubbed Trump and others at the State of the Union address, giving POTUS a dimwitted demeanour and thereby contributing to an inaccurate portrayal of the people in question. Nonetheless, it is freaking hilarious. Fake videos are a great creative outlet for several YouTube channels. About a year ago, I first encountered another creative digital technique, one that transforms the face of one person into that of another. This technique was dubbed deepfake, from the words deep learning (machine learning with artificial neural networks and representation learning) and fake. Deepfake creators on YouTube mainly focus on entertainment. Ctrl Shift Face, the deepfake creator with the largest subscriber base on YouTube, made a video of Bill Hader on a talk show in which, as Hader impersonates celebrities like Arnold Schwarzenegger, his face transforms into theirs. These videos get better every week, and it is getting harder to tell the fakes from the originals. Fortunately, these videos are made for educational and entertainment purposes, whereas a large portion of creators use deepfakes for rather unethical ends.

Fig. 5 State of the Union 2020.

Fig. 6 Bill Hader impersonates Arnold Schwarzenegger.

There are logical reasons for the disapproval and fear of deepfakes. Deepfake creators on YouTube primarily focus on swapping male actors from movie to movie, while porn deepfakes are almost entirely created using female subjects. As with many new visual technologies, the porn industry is on top of it. In late 2019, 96% of all deepfake videos online were pornographic, out of a total of over 14,000 videos, almost double the number of the year before (Ajder et al. 1). Deepfakes usually depict celebrities, because there is already so much material of them to use as data. They can be very harmful to the person in question, as in the case of Noelle Martin, who now fights against this type of abuse. This is how she described seeing a deepfake of herself:

The video took a moment to load before an 11-second clip began playing in front of me. It was of a woman on top of a man, having sex with him, her whole naked body was visible, her eyes looked straight into the camera as her body moved and her face reacted to the activity. Except it wasn’t the face of a stranger, it was my face […] I watched as my eyes connected with the camera, as my own mouth moved. It was convincing, even to me. Why would people ever think it wasn’t real? Anyone could have seen it – colleagues, friends, friends of friends. I slammed my laptop shut and sat in stunned silence (Scott).

Obviously, working on deep learning algorithms isn’t inherently bad, and neither is consuming pornographic content. But seeing yourself doing something you haven’t done is very unsettling, especially when you haven’t given permission. The fear of fake audiovisual content is already relevant in our society, and with upcoming techniques like deepfakes it has become even more unbearable to think about. Numerous social media platforms and porn sites have taken action. PornHub, one of the best-known porn websites there is, has banned deepfake content, since governments have failed to do anything about it (Brown). We are moving ever faster towards a post-truth era, and trying to stop this isn’t really working. We need to understand the full spectrum of audiovisual manipulation and create our own tools to deal with the collapse of reality.

As the previous, somewhat dystopian examples show, deepfakes can be very harmful. Others are so superficial that they create a comedic effect with funny replacements of actors on actors. However, I think these fakes cover only a small part of this technique’s possibilities. Take a current issue like the Covid-19 pandemic, in which certain actors can no longer travel or don’t feel safe on set: a body double, or possibly a deep-actor, could play the part. This would mean that the face alone is being sold as a product to increase the value of the content. This is also shown in the film The Congress, in which actor Robin Wright sells her image for movies she is no longer willing to make. This would almost imply that the value of one’s face is more important than the actual performance, so I think it would only work for low-level content like commercials or throwaway supporting roles. Either way, this wouldn’t be a very helpful tool for the community; it would just be another money-making tool for the already rich. We are already modifying our body features, so what if you could see yourself surrounded by lots of celebrities? If I replaced my friends’ faces with those of resembling celebrities, would I become more admirable because I hang out with famous people? However, using someone else’s face for your own benefit can be illegal, especially if I end up making money with the content I create. So using the face of a celebrity is out of the picture, but using your own could still be an option. Suppose I have always wanted to go on a holiday to Bali but don’t have the money. Using a tool like deepfake and someone else’s holiday video, I could transform myself into that scene. In a sense, I would create a new memory, especially if I spread it on social media as the truth.
This line of thought does lead to something interesting: seeing yourself do something you have never done before or aren’t able to do. This could help people manifest themselves in something visible instead of only imagining it. I think there are many more uplifting uses for deepfakes; we need to be more creative and think outside the box when it comes to them.

Fig. 7 The Congress.

Technology

The term deepfake itself is an umbrella term for audiovisual manipulation of the face through deep learning algorithms. There are numerous programs for making a deepfake yourself, such as Face Swap and DeepFace Lab by Iperov. These are usually GUI-based, but they still require considerable knowledge of programming, video editing, Python and more. These deepfake programs can use a GAN-type network (generative adversarial network) that constantly evaluates whether the generated image resembles the desired image, and so keeps improving itself. A GAN involves two components: the generator, which generates the images, and the discriminator, which examines whether an image is fake or not. There are more in-depth explanations of all the inner workings, but I appreciate the simple analogy of the teacher and the student by Joey Mach, an exponential technologies enthusiast and part of The Knowledge Society:

A more intuitive way of thinking of this is to imagine how the generator is analogous to the student, and the discriminator to the teacher. Remember the time when you wrote a test, and you were so stressed out about your results because you have no idea how well you’ve done. The student doesn’t know the mistakes they’ve made until the teachers mark their test and give them feedback. The students’ job is to learn from the feedback the teacher gives them to improve their test scores. The better the teacher is at teaching, the better the student’s academic performance gets. Theoretically, the better the generator is at creating almost flawlessly realistic images, the better the discriminator gets at differentiating real images from fake images. The same is true for the reverse, the better the discriminator is at image classification, the better the generator will be (Mach).
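The teacher’s marks in this analogy can be written down as numbers. The sketch below expresses them as the two scores used in the standard GAN formulation. The discriminator (the teacher) gets a binary cross-entropy loss, and the generator (the student) gets the common “non-saturating” loss. This is a minimal Python illustration under those assumptions, not DeepFace Lab’s code. The function names and probability values are hypothetical.

```python
import math

# Toy illustration of the "teacher marks the test" feedback in a GAN.
# d_real: the discriminator's probabilities that real images are real.
# d_fake: the discriminator's probabilities that generated images are real.
# Function names and numbers are hypothetical, chosen for illustration only.


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: low when the teacher marks real as real and fake as fake."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the teacher believes the fakes are real."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # As the student's fakes become more convincing, the teacher scores them higher.
    for score in (0.1, 0.5, 0.9):
        d_fake = [score, score]
        print(f"D(fake)={score}: "
              f"teacher loss={discriminator_loss([0.9, 0.9], d_fake):.3f}, "
              f"student loss={generator_loss(d_fake):.3f}")
```

Running it shows the tug-of-war in Mach’s analogy. As the teacher’s score for the fakes rises from 0.1 to 0.9, the student’s loss falls while the teacher’s loss rises. In a real GAN, each side trains on exactly that signal.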

This analogy, which follows an old hierarchical form of teaching, is a good example of a master-slave relationship. The two keep improving their ability to jointly fake an image, creating something new, or rather rearranging pixels to better match the source. Deep learning algorithms and artificial intelligence are becoming more common every day. They are embedding themselves in our society the way deepfakes embed themselves in our communal video memory.

My YouTube recommendations contain a lot of new deepfakes; some of them are really bad and some work very well. Around February 2020, when the Covid-19 virus began to confine people to their homes, I was in a pickle. I wanted to experiment with deepfakes myself, but I didn’t have the understanding or the resources to begin. I asked some of the YouTubers whether they might create a deepfake for me. In hindsight, I understand that this was far too much to ask, since creating one takes a lot of time. The school was closed and nobody I knew had a computer capable of handling such heavy computations, so all that remained was to build one myself. It became clear that you need a high-end Nvidia graphics processing unit (GPU) with at least 8 GB of memory, so I built myself a €1,700 machine. First I installed the drivers and downloaded what is, in my opinion, the best deepfake program, DeepFace Lab 2.0 (DFL), which was an easy install. Then I could finally start experimenting. I messed around with the program and searched for good instructions, which, against all odds (not really), are listed on one of the best-known pornographic deepfake sites, MrDeepfakes. It was an unpleasant fact that anyone who wants to create a deepfake is required not only to visit the site but also to make an account to view additional technical information. In the end, all you need to make a compelling deepfake is a pretty decent computer and a lot of time.

AMD Ryzen 7 3700X Central Processing Unit

be quiet! Dark Rock Pro Air Cooler

ASUS ROG Strix X570-E Motherboard

2 × 16 GB G.Skill Ripjaws Memory Modules

Gigabyte Nvidia RTX 2070 Super 8 GB Windforce Graphics Processing Unit

850 W Corsair Power Supply

500 GB Samsung M.2 SSD

Fig. 8 Building my Computer.

Deep Learning

Eventually, I took it upon myself to create my first deepfake. Instead of following the conventional route of swapping the faces of celebrities, I opted to use my own face as the source material and the renowned actor Michael Fassbender as the destination. Looking at a lot of deepfakes, I saw that it is important to have a similar face outline, the same forehead and the same hair colour. This gives the best result, and of all the celebrities out there, I looked most like Fassbender. I filmed my face making numerous expressions and reading some texts, to give DFL enough material to work with. After I extracted around 5,000 images from the video, the program attempted to learn my face: how I articulate, how I smile, how I squeeze my eyes, everything. In the end, what it learned would be projected onto the destination, which in this case was a clip from Justin Kurzel’s film Macbeth. In this clip, Macbeth reflects on his kingdom and converses with his lady. I chose this clip because of the material, the size of the face in the frame and the number of frames to transform. This was a long, two-week process of experimentation and trial and error. Eventually, I concluded that the result was enticing enough to continue in post-production. This included further colour correction, blending the layers of faces and applying grain to better blend my face into the existing material. Overall, I was very pleased with my first deepfake experiment. However, it has some flaws. I learned that when you create a face data set, you need to capture every expression with every eye position. During my face recording I only looked in one direction, so DFL only learned my eyes in that position. I learned a lot from creating deepfakes, and I wanted to share the effect of seeing yourself with my peers.
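The lesson about eye positions is essentially a coverage problem. The face set has to contain every expression combined with every gaze direction, and a large number of images does not help if they all share the same gaze. Below is a minimal Python sketch of such a check. It assumes each extracted face has already been labelled with a gaze direction and an expression, for example by hand. The categories and the function name are hypothetical and not part of DFL. The sketch lists the combinations still missing before a long training run begins.

```python
from itertools import product

# Hypothetical labels chosen for illustration, not taken from DFL.
GAZES = ("left", "centre", "right", "up", "down")
EXPRESSIONS = ("neutral", "smile", "talking", "squint")


def missing_combinations(face_set):
    """Return every (gaze, expression) pair that has no image in the face set.

    face_set is a list of (gaze, expression) labels, one per extracted face image.
    """
    covered = set(face_set)
    return [pair for pair in product(GAZES, EXPRESSIONS) if pair not in covered]


if __name__ == "__main__":
    # A recording in which the subject only ever looked straight ahead.
    recording = [("centre", e) for e in EXPRESSIONS] * 1250  # 5,000 images
    gaps = missing_combinations(recording)
    print(f"{len(gaps)} of {len(GAZES) * len(EXPRESSIONS)} combinations missing")
```

Here the recording holds 5,000 images, yet 16 of the 20 combinations are missing, because every image shares the same straight-ahead gaze. How much variation the images cover matters more than how many there are.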

Fig. 9 Aligned Face Set.

Fig. 10 Training Preview.

Original movie, Michael Fassbender, Face Data_dst

Overlay Face, Learned by DeepFace Lab, Data_src

Masked Face, Merged by DeepFace Lab, Exported to Mp4

Final Composition, Edited in DaVinci Resolve

Fig. 11 Face Merging Process.
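The merge step in Fig. 11 boils down to compositing. Where the mask is fully on, the learned face replaces the destination pixels, and along a soft mask edge the two are mixed. Below is a minimal Python sketch of that blend, out = mask × face + (1 − mask) × frame. For readability it assumes tiny hand-made "images" stored as plain lists of RGB tuples. It is not DFL’s merger, and it leaves out the colour correction and grain described above.

```python
# Sketch of masked compositing: out = mask * face + (1 - mask) * frame.
# Images are nested lists of (r, g, b) tuples; the mask holds values in [0, 1].
# The data layout is a simplifying assumption for illustration only.


def blend_pixel(face_px, frame_px, alpha):
    """Mix one face pixel into one frame pixel with weight alpha."""
    return tuple(round(alpha * f + (1.0 - alpha) * b) for f, b in zip(face_px, frame_px))


def composite(face, frame, mask):
    """Overlay the learned face on the destination frame using a soft mask."""
    return [
        [blend_pixel(fp, bp, a) for fp, bp, a in zip(face_row, frame_row, mask_row)]
        for face_row, frame_row, mask_row in zip(face, frame, mask)
    ]


if __name__ == "__main__":
    face = [[(200, 150, 130)] * 3]
    frame = [[(100, 100, 100)] * 3]
    mask = [[1.0, 0.5, 0.0]]  # inside the face, soft edge, outside the face
    print(composite(face, frame, mask))
    # prints [[(200, 150, 130), (150, 125, 115), (100, 100, 100)]]
```

A soft mask edge, like the 0.5 value here, lets the swapped face fade into the surrounding skin instead of ending in a hard seam.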

I shared my video with some friends and asked if they too would like to see themselves in a scene or event. One close friend of mine, Tim, is a huge fan of Feyenoord and thoroughly enjoys watching football. However, he cannot walk: complications during his birth left him unable to move properly, and he also has spasms. Nonetheless, he really wanted to see himself play a match for his favourite club. Fortunately, his friends had always told him he looked like Michiel Kramer, a player at Feyenoord. So I compiled a face data set and edited a short video of Kramer playing and scoring for Feyenoord. After extracting, learning and merging the faces, I ended up with a rather compelling video. I added cheering sounds on top of the video, along with the song “You’ll Never Walk Alone” by Gerry and the Pacemakers. I was nervous that he would be disappointed to see himself walk and run, which he never could. Tim told me, however, that he was prepared for that and was optimistic. His reaction was amazing: he thought it looked like him. His brother was there to watch it with him, and Tim kept telling him, “Look at that, it really looks like it’s me!” When I later asked him what he thought of it, he was really pleased with the result. Though he knows something like this could never happen, he still identified with the deepfake in the video. Following Tim’s deepfake, Fransje and Mariken both asked to see themselves in a situation they would never venture into. From these experiments I learned a lot about how to make deepfakes less uncanny and what type of footage and narrative to create. Overall it was a success, but there are potential pitfalls, as an experiment with Babeth showed. She is scared of big waves, but she wanted to see herself surfing them.
Finding proper material was already hard, and finding a woman who resembled her in body and hair was difficult too. I eventually found some footage, edited out the impossible and failed parts of the deepfake, and showed it to her. Her reaction was unlike Tim’s; she was trying to understand what she was observing. She told me she didn’t think it really resembled her because of the hair colour and the face outline, though at one point she did see herself. She was distancing herself from the deepfake and didn’t feel a connection with the video. This led to a somewhat uncanny experience: it looked familiar, but it still wasn’t her.

The main emotion, I think, is joy, man. I had no idea this was possible. It really looked like it was me. Watching the video, I was mostly very amazed. I also found it really funny to see, probably because I hadn’t expected it to be this good. I can also understand that people get emotional from videos like this. You can make people do things they actually can’t do. Bizarre!

“Excitement”, “warmth”, “wow”, “adventure” and “possibilities”. These were the first words that came to mind when I saw myself go by on an enormous horse on a screen. It feels a bit like déjà vu: you see yourself but can’t quite place it, because, well, this of course never really happened. Yet at the same time it feels as if this could one day become reality. It opens the door to possibilities by letting you see yourself in a challenging situation within a safe environment. The fear triggers fade away and curiosity wins. Who knows…

Just the thought of having to address a group, and it doesn’t even have to be from a stage, makes me freeze. Worse still… just the idea that I could be heard in public through a broadcast sound recording… breaks me out in a sweat.

Why? Partly a lack of self-confidence, but also the feeling that I am never able to say what I want to say, to tell my story fluently and understandably. I find that an impossible task. There are always black holes, empty spaces, and to fill them I let loose my way of thinking, which others apparently tend to find rather incomprehensible.

I always used to rate my fear of public speaking a 10. It has decreased somewhat over the years. Through my work I have had to stand on a stage or in front of a group many times. But it doesn’t really want to go down… now it’s at an 8 or 9.

And then the Deep memories. That appealed to me. Put me on a stage, I said.

First impression: how well my face follows what is happening. Those pyjamas, I would wear them just like that. The hair, could well be mine. Lively arms and hands with rolled-up sleeves… that’s me! Exactly!

But then the voice, her voice… it doesn’t suit me. And she is much more animated than I am. And I see my sister.

How strange it is to see yourself and your sister and the family traits like this. Unreal.

When I let others watch, they, on the contrary, saw me completely.

Waited a few days. And now without sound. That’s better, more realistic. Watched it several times.

It really does make me nervous, a knot in my stomach. All those people watching (me?) and laughing. At my lively face. At pyjamas I would wear, at the flapping arms and hands that are completely mine. I am so animated. My breathing adjusts to what I see.

It’s in my head, it keeps coming back. I have been thinking about it for days now and keep talking about it. It does something, but I don’t know what yet. Because of Corona I currently have no opportunity to present myself to a group, but it might well go more easily.

The feeling is now a 7/8

When I saw the video, I immediately wanted to get into the water! It also gave me an instant bit of self-confidence, like: hey, that’s me. Something that used to seem an unrealistic goal suddenly seems to be within the realm of possibility! I felt enthusiasm and self-confidence. But it is also a strange sensation, because somewhere I also know it isn’t really me. Still, it is a great motivator to go surfing again and get better.

Fig. 12 Tim Reaction. [Recommended viewing]

Fig. 13 Mariken Reaction.

Fig. 14 Fransje Reaction.

Fig. 15 Babeth Reaction.

All the original material was found on YouTube. In some countries it is not permitted to repurpose such material for commercial use, so all the existing material I modified is for personal use only and has been demonetised.

Training Preview, Tim

Training Preview, Mariken

Training Preview, Fransje

Training Preview, Babeth

Fig. 16 Training Preview Comparison.

Uncanny

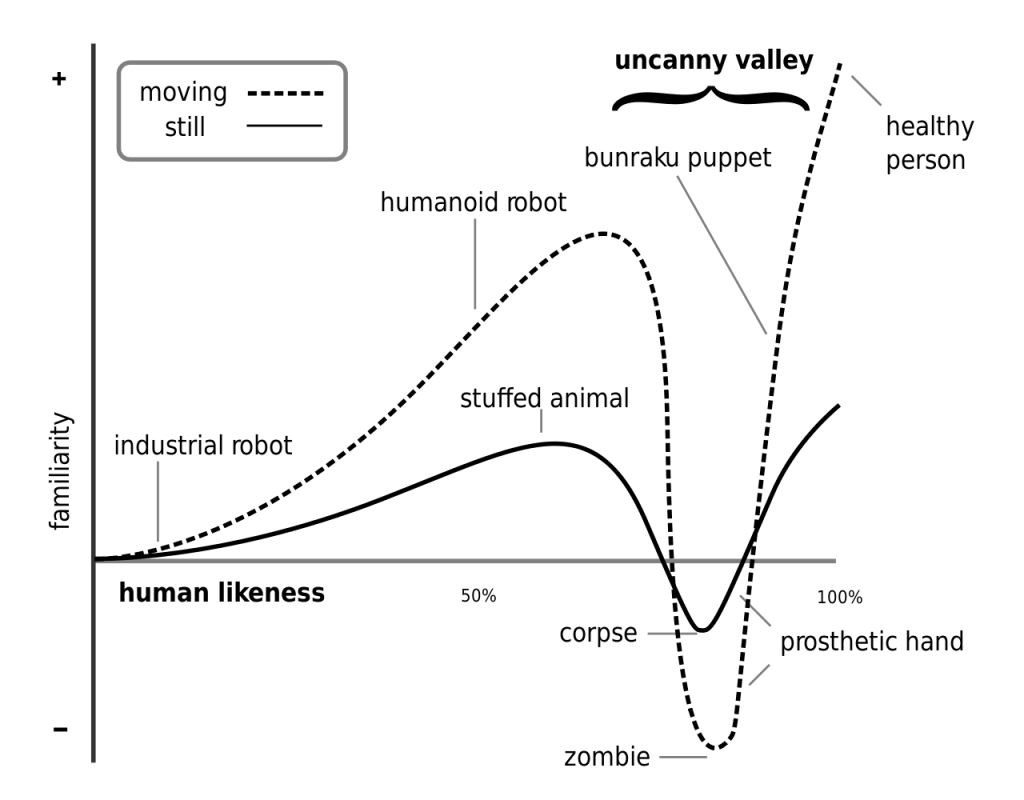

Sometimes when you’re watching a movie containing special effects, you may get the unsettling feeling that something is not right. I had this recently with the new teaser for Aladdin, the live-action remake of the original animated Walt Disney movie: something was off. Will Smith looked neither like a magical genie nor like a human, but something oddly in between. It’s the same with hyper-realistic human robots that are 99% like the real deal but still very unsettling to look at. This feeling that something is wrong is described as uncanny: something strangely familiar, yet unsettling to look at. Just make a robot look like a robot; Star Wars understood that early on. The film industry uses the graph of the uncanny valley to try to stay out of this dangerous zone of unsettling feelings. The graph was created by robotics professor Masahiro Mori. As a creator, you want to be either before or after the uncanny valley. When doubt arises, the viewer is more focused on the uncanny experience than on the actual video being shown, though the uncanny can also provide a good laugh. There are examples of multi-million-dollar companies trying to create a visual effect and still not quite getting it. For example, Henry Cavill had a moustache during the reshoots of Justice League, and Warner Bros. failed to convincingly remove his hairy upper lip digitally. Not long after, a clip popped up on YouTube showing Henry Cavill sporting a clean shave, and the uncanny was nowhere to be found (DeepFakes Club). It appears a deepfaker took it upon himself to fix the unsettling scene with a free-to-use program and his 500-dollar home computer (Liszewski). For a visual effect to be perceived as real, believability has to win over doubt, and that is exactly what this deepfake achieved.

Fig. 17 Still from Disney’s Aladdin – Special Look (55).

Fig. 18 Mori, The Uncanny Valley (99).

Fig. 19 DeepFakes Club, I Taught an AI.

The uncanny did not originate in the modern filmmaking era. Its importance was established by the psychoanalyst Sigmund Freud in his 1919 essay Das Unheimliche, in which he argued that the uncanny is not merely something weird or mysterious but rather something strangely familiar. It is the undoing of the natural: when you experience it, you start to question your existence. It sits between the known and the unknown. The feeling of uncanniness arises when an expectation is overturned by a deeper, often sudden, perception. The road of expectation takes a hard turn towards the unnatural. It’s as if you are looking at a bystander’s watch, only to realise he has a prosthetic arm; it catches you by surprise. Sometimes the lingering feeling of uncanniness creeps up on you bit by bit, like looking at a face and slowly realising that it is not natural at all. For something to be believable it needs to feel natural; when something does not feel familiar, doubt arises and one starts to question reality.

Unseen Self

After reviewing the short clip I made of myself playing a part in the movie Macbeth, I started to notice something about how I observed and interpreted the video. At some point I realised that I was looking at myself, experiencing it as if I had been there, though I know I wasn’t. Seeing myself saying those words, sitting in that room, conversing with her, it seemed like I had done those things, because it was my face. It became like one of those memories you have lost over time that a video helps you get back, like the video my uncle sent me of jumping off a boat. This observation was followed by an uncanny experience when Macbeth puts his hand underneath the lady’s gown. I hadn’t noticed that moment when selecting the clip, but seeing it back now took me by surprise. I never did anything like that, yet here I was watching myself do it, which made me uncomfortable. At the same time, I understood the great power of this tool. Observing myself from a 3rd person perspective helped me realise who I was not, but who I could become. Seeing yourself perform an action you have never done before could eventually be experienced as an alternative identity, almost as if you are creating a new memory and aligning yourself with it.

Fig. 20 Macbeth, Deepfake experiment.

Perceiving yourself from a 3rd person perspective is common in this day and age. When you post a video of yourself on Instagram, you are actively observing a past version of yourself on a screen. Observing a version of yourself that doesn’t exist, however, rarely happens in life. A dream in which you see yourself taking part, and thus see yourself from a 3rd person perspective, could be somewhat comparable. Being recorded without your knowledge could also somewhat apply to this phenomenon, as could looking at yourself in the mirror for the first time in 20 years. Nonetheless, none of these examples compare to the effect of the 3rd person deepfake observation. They all rely on self-awareness: you are aware of the actions you took or are taking. The deepfake experience is perhaps closer to memory loss, like watching a recording of yourself made before you lost your memory. You are not actively aware of your previous actions, but you still recognise that it is you, your identity. This could even be described as an uncanny memory: the recollection of an identity outside one’s familiar knowledge or perceptions.

Autobiographical

Recalling a memory is a difficult process that relies on numerous systems working together to untangle a memory caught in the net of personal fiction. Memories are stored as knowledge over time and can be recalled to compare with the current self. Say an event happens in your life: you receive your graduation diploma. This event is perceived through your sensory memory. When the inputs are encoded and processed, the event is transferred to your short-term memory. When the event is important enough, because you have added meaning to it, it is stored in your long-term memory, from which you can recall it (Atkinson and Shiffrin in CrashCourse 2:49-4:35). This is an oversimplified and somewhat outdated model of memory creation, but it leads to something more interesting: autobiographical memory, the part of long-term memory that holds events from our personal life, like your graduation. Autobiographical memory is something like a personal narrative. It contains a vast dataset of your being: your name, where you have been, your emotions and the events you have experienced. It grounds your sense of self; without your autobiographical memory you lose that sense of self, because it provides an unbroken and consistent existence (Conway et al., McAdams in Fivush 574). We are defined by our memories, but in return, we define which memories are recalled most easily. Some individuals with severe amnesia report that they have lost their former selves and are no longer themselves (Hirst in Fivush 574). This would suggest that memories are part of our identity, and if they are altered, removed or forgotten, we change too.

The internal process of recollecting memories is like an editor who has to cut a feature film in 2 seconds: you are bound to end up with mishaps and unrelated content in your memory movie. Autobiographical memory is prone to alteration, as shown in studies where researchers planted memories of almost drowning or being attacked by an animal, succeeding with about half of their test subjects (Loftus 10:26-11:45). This would suggest that we could create new memories that are not from our own lives and identify with them as if they belonged to us. Psychology challenges and modifies unhelpful behaviour and irrational patterns of thought through cognitive-behavioural therapy, a method that can also be used to battle negative memories. When a person is in a depressive state, negative memories are retrieved faster than positive ones (Brewin 773). Brewin describes this as a retrieval competition: several memories connected to the same event or object compete to be recalled. For a person to recollect a positive memory instead of a negative one, a form of imagery rescripting can be used, which could be described as recreating or reconstructing a memory connected to a damaging event. The rescripted image should be memorable, positive and attention-grabbing, and it should be prompted in the presence of the negative event. These rescripted images can even be fictional, less rational or physically impossible. One process is to first enrich the negative imagery by calling it to mind, and then rescript these memories towards a positive outcome (Arntz and Weertman, Hackmann in Brewin 777). Autobiographical memory is malleable, like our collective memory: just as fake news can become part of what we believe to be true, a rescripted event can embed a positive effect.

3rd Perspective

An autobiographical memory can usually be recalled from two perspectives: the 1st and the 3rd person perspective, also known as field and observer (Sutin and Robins 1386). The 1st person perspective is seen through your own eyes; in the 3rd person perspective you observe yourself. It’s comparable to video games where you are either inside or behind the character. Most memories are recollected from the 1st person, but some are reconstructed from the 3rd person. Such a memory is often connected to a stressful or emotional event that involves a focus on the self, which usually occurs when you are the centre of the event, as in a presentation for a big audience (Nigro). It has been proposed that recalling a memory from the 3rd person works as a coping mechanism. In a study by McIsaac and Eich, almost half of the patients recalled anxiety and traumas from a 3rd person perspective, and it has been suggested that this serves as a cognitive strategy to better cope with negative memories (McIsaac and Eich in Sutin and Robins 1387). Thus seeing yourself from a 3rd person perspective in an event that is usually stressful may help you better assess the situation and evaluate what is going on. 3rd person self-talk is also used as a technique to distance yourself from the emotional weight of a situation; it can help you stay calm and take an objective, observing perspective so you are not so hard on yourself. It has been suggested that through 3rd person narratives people feel less overwhelmed and gain new knowledge and insights into the relived event (Schneiderman). While the 1st person perspective often engulfs individuals in emotion, the 3rd person narrative gives an individual room to breathe and to experience a situation from the sideline, forming a better understanding of what is actually going on. This could, hopefully, ease the process of changing one’s negative events into an alternate outcome.

Fig. 21 1st Perspective.

Fig. 22 3rd Perspective.

Numerous methods have been designed to help people overcome anxieties and negative events, with good outcomes, such as virtual reality exposure in the treatment of social anxiety (Anderson). These, however, are patients who are willing and able to rescript their memories; for them, it is possible to take the 1st person perspective and bear the emotional load. When a person is not yet able to take the first step of rescripting negative events, an objective 3rd person approach could be used. Something similar is already done through imagery rescripting in cognitive-behavioural treatment. The research collected in this document suggests that visually perceiving oneself from a 3rd person perspective could help a person emotionally distance themselves from a negative situation, while at the same time creating a new positive memory through imagery such as film.

Screens

Films have always been a big part of my life; they too are a 3rd person perspective on life itself. Film is a tool for creating fiction, while our collective memory is somewhat like fiction too: a subjective manifestation of how we see reality. A film is an experience package made by some of the brightest artists there are. They collect various emotional life lessons and pour them into a compressed session of approximately 2 hours. We consume film like no other species; last year, in the United States alone, cinemas sold 1,239,254,735 tickets (The Numbers). So it is safe to say that we enjoy watching movies filled with sorrow and uncomfortable moments, but also empowerment. When I go out to see a movie, I’m looking for stories that make me feel alive; they help me understand myself better. I think that people need to experience more. As Steve Martin says, “You know what your problem is, it’s that you haven’t seen enough movies – all of life’s riddles are answered in the movies” (Roth and Aberson 59). I learned a lot from movies and used many of their lessons in my own narrative. Yes Man, Star Wars and Mr. Nobody are just a few of the movies that changed me. It’s special how emotional experiences are transported from the screen into the mind and used to empower our lives. When watching a movie, a person can place themselves in the role of a character, yet there remains a distinction between the actor and yourself: your face doesn’t mirror that of the actor. This is where deepfakes could play a major part.

Conclusion

I think it’s important to create a better future for our society and the wellbeing of its residents. The prevailing view of fake memories and rapidly evolving digital manipulation tools is that they are making the world a worse place to be in. This is not in line with my beliefs; I think we need to recognise the positive potential of these tools and use them for personal empowerment. I would suggest that seeing yourself from a 3rd person perspective, without having to imagine it yourself, could provide emotional space and be an impactful rescripting tool for changing how one identifies with a negative memory. This could be done by taking a dystopian tool and transforming its negative imagery into a monumental memory maker. Deepfakes have a bad reputation, and their potential has been misunderstood: they can create a truly unseen 3rd person perspective of oneself, granting the ability to objectively observe what is happening. When a video containing a stressful event is shown in combination with cognitive rescripting under the supervision of a psychologist, one could create new positive memories that take the place of negative events. Just as our images are prone to modification, and consequently to a change in what they represent, our autobiographical narrative is malleable through the use of deep memories. I want to create short videos about personal negative events, objects or situations. In these videos, a person will see themselves interacting with these triggers. This will be made possible by building a source set of their face and using deepfakes to transform their face onto a stand-in actor’s face. This will give a person the possibility to see themselves from a 3rd person perspective and observe themselves objectively. My role will be the editor of these deep memories. This project is still in its infancy, but it will be researched and experimented upon over time.
I will take the first steps to further develop this tool and will seek funding to support a proper study. For now it could be seen as just a video manipulation tool, but with further development and by bringing together multiple disciplines, such as psychology and filmmaking, the creation of deep memories could become a valuable tool for people in need of change.

I would like to express my deepest appreciation to my research supervisor Dieuwke Boersma for her persistent support and direction. Furthermore, I would like to greatly thank all the participants, Tim van Zutphen, Babeth van Winsen, Mariken van Gulpen and Fransje Barnard, for their trust and for providing their face data. I want to show my gratitude to Josue Amador and Isaac Monté for their guidance and their ability to inspire. And thank you to everyone who supported me.

Ajder, Henry, et al. The State of Deepfakes: Landscape, Threats and Impact. Deeptrace, 2019, enough.org/objects/Deeptrace-the-State-of-Deepfakes-2019.pdf.

Anderson, Page, et al. ‘Virtual Reality Exposure in the Treatment of Social Anxiety’. Cognitive and Behavioral Practice, vol. 10, no. 3, 2003, pp. 240–47. Crossref, doi:10.1016/s1077-7229(03)80036-6.

Arntz, Arnoud, and Anoek Weertman. ‘Treatment of Childhood Memories: Theory and Practice’. Behaviour Research and Therapy, vol. 37, no. 8, 1999, pp. 715–40. Crossref, doi:10.1016/s0005-7967(98)00173-9.

Atkinson, R. C., and R. M. Shiffrin. ‘Human Memory: A Proposed System and Its Control Processes’. Psychology of Learning and Motivation, 1968, pp. 89–195. Crossref, doi:10.1016/s0079-7421(08)60422-3.

Bad Lip Reading. ‘“STATE OF THE UNION 2020”’. YouTube, uploaded by Bad Lip Reading, 27 Feb. 2020, www.youtube.com/watch?v=VOyHF_OUuNE.

Bergland, Christopher. ‘Silent Third Person Self-Talk Facilitates Emotion Regulation.’ Psychology Today, Sussex Publishers, 28 July 2017, www.psychologytoday.com/us/blog/the-athletes-way/201707/silent-third-person-self-talk-facilitates-emotion-regulation.

Blade Runner 2049. Directed by Denis Villeneuve, performances by Ryan Gosling and Carla Juri, Sony Pictures, 2017.

Brewin, Chris R. ‘Understanding Cognitive Behaviour Therapy: A Retrieval Competition Account’. Behaviour Research and Therapy, vol. 44, no. 6, 2006, pp. 765–84. Crossref, doi:10.1016/j.brat.2006.02.005.

Brown, Nina Iacono. ‘Congress Wants to Solve Deepfakes by 2020’. Slate Magazine, 15 July 2019, www.slate.com/technology/2019/07/congress-deepfake-regulation-230-2020.html.

Buchler, Pavel. Ghost Stories: Stray Thoughts on Photography and Film. Proboscis, 1999.

Conway, Martin A., et al. ‘The Self and Autobiographical Memory: Correspondence and Coherence’. Social Cognition, vol. 22, no. 5, 2004, pp. 491–529. Crossref, doi:10.1521/soco.22.5.491.50768.

CrashCourse. ‘How We Make Memories: Crash Course Psychology #13’. YouTube, uploaded by CrashCourse, 5 May 2014, www.youtube.com/watch?v=bSycdIx-C48.

Ctrl Shift Face. ‘Bill Hader Impersonates Arnold Schwarzenegger [DeepFake]’. YouTube, uploaded by Ctrl Shift Face, 10 May 2019, www.youtube.com/watch?v=bPhUhypV27w.

DeepFakes Club. ‘I Taught an AI to Shave Henry Cavill’s Mustache’. YouTube, uploaded by DeepFakes Club, 6 Feb. 2018, www.youtube.com/watch?time_continue=2&v=2PZ3W1W20bk&feature=emb_logo.

Fivush, Robyn. ‘The Development of Autobiographical Memory’. Annual Review of Psychology, vol. 62, no. 1, 2011, pp. 559–82. Crossref, doi:10.1146/annurev.psych.121208.131702.

Freud, Sigmund, et al. The Uncanny. Amsterdam, Adfo Books, 2003.

Hackmann, Ann. ‘Working with Images in Clinical Psychology’. Comprehensive Clinical Psychology, 1998, pp. 301–18. Crossref, doi:10.1016/b0080-4270(73)00196-6.

Harari, Yuval Noah. Sapiens. Translated by John Purcell and Haim Watzman, London, Vintage, 2015.

Iperov. ‘Iperov/DeepFaceLab’. GitHub, github.com/iperov/DeepFaceLab. Accessed 19 Apr. 2020.

Liszewski, Andrew. ‘A $500 PC and an AI Did a Way Better Job Erasing Henry Cavill’s Justice League Mustache Than Expensive VFX’. Io9, 7 Feb. 2018, io9.gizmodo.com/a-500-pc-and-an-ai-did-a-way-better-job-erasing-henry-1822797682.

Loftus, Elizabeth. ‘How Reliable Is Your Memory?’ TED Talks, uploaded by TEDGlobal, 1 June 2013, www.ted.com/talks/elizabeth_loftus_how_reliable_is_your_memory.

Macbeth. Directed by Justin Kurzel, performances by Michael Fassbender and Marion Cotillard, Studio Canal, 2015.

Mach, Joey. ‘The Ugly, and The Good.’ Towards Data Science, 2 Dec. 2019, towardsdatascience.com/deepfakes-the-ugly-and-the-good-49115643d8dd.

McAdams, Dan P. ‘Unity and Purpose in Human Lives: The Emergence of Identity as a Life Story’. Studying Persons and Lives, edited by A.I. Rabin et al., 1990, pp. 148–200, psycnet.apa.org/record/1990-97218-004.

McIsaac, H. K., and E. Eich. ‘Vantage Point in Traumatic Memory’. Psychological Science, vol. 15, no. 4, 2004, pp. 248–53. Crossref, doi:10.1111/j.0956-7976.2004.00660.x.

Mori, Masahiro, et al. ‘The Uncanny Valley [From the Field]’. IEEE Robotics & Automation Magazine, vol. 19, no. 2, 2012, pp. 98–100. Crossref, doi:10.1109/mra.2012.2192811.

Ratner, Paul. ‘How a Wild Theory About Nelson Mandela Proves the Existence of Parallel Universes’. Big Think, 30 Jan. 2019, bigthink.com/paul-ratner/how-the-mandela-effect-phenomenon-explains-the-existence-of-alternate-realities.

Roth, Eric, and Toni Aberson. Compelling Conversations: Questions and Quotations on Timeless Topics. Amsterdam, Amsterdam University Press, 2010.

Schneiderman, Kim. ‘Fooling Your Ego.’ Psychology Today, Sussex Publishers, 15 June 2015, www.psychologytoday.com/us/blog/the-novel-perspective/201506/fooling-your-ego.

Schwartz, Joan M. ‘“Records of Simple Truth and Precision”: Photography, Archives, and the Illusion of Control’. Archivaria, 2000, pp. 1–40, archivaria.ca/index.php/archivaria/article/view/12763/13951.

Scott, Daniella. ‘Deepfake Porn Nearly Ruined My Life’. ELLE, 6 Feb. 2020, www.elle.com/uk/life-and-culture/a30748079/deepfake-porn.

Sontag, Susan. ‘In Plato’s Cave’. On Photography, Picador, 1977, pp. 3–24, sites.uci.edu/01807w14/files/2014/02/SontagSusan_InPlatosCave.pdf.

Sutin, Angelina R., and Richard W. Robins. ‘When the “I” Looks at the “Me”: Autobiographical Memory, Visual Perspective, and the Self’. Consciousness and Cognition, vol. 17, no. 4, 2008, pp. 1386–97. Crossref, doi:10.1016/j.concog.2008.09.001.

The Congress. Directed by Ari Folman, performance by Robin Wright, Drafthouse Films, 2013.

‘The Numbers – Movie Market Summary 1995 to 2020’. The Numbers, www.the-numbers.com/market. Accessed 20 May 2020.

tutsmybarreh. ‘[GUIDE] – DeepFaceLab 2.0 EXPLAINED AND TUTORIALS (Recommended)’. Mr DeepFakes, 5 Feb. 2020, mrdeepfakes.com/forums/thread-guide-deepfacelab-2-0-explained-and-tutorials-recommended. Accessed 3 June 2020.

Walt Disney Studios. ‘Disney’s Aladdin – Special Look: In Theaters May 24’. YouTube, uploaded by Walt Disney Studios, 11 Feb. 2019, www.youtube.com/watch?v=7hHECMVOq7g.

Fig. 1 Header. Created by Yori Ettema, 20 June 2020.

Fig. 2 Zeilen. Filmed by René Ettema, July 2003.

Fig. 3 Pixels. Created by Yori Ettema, 10 June 2020.

Fig. 4 Blade Runner 2049. Directed by Denis Villeneuve, performances by Ryan Gosling and Carla Juri, Sony Pictures, 2017.

Fig. 5 Bad Lip Reading. ‘“STATE OF THE UNION 2020”’. YouTube, uploaded by Bad Lip Reading, 27 Feb. 2020, www.youtube.com/watch?v=VOyHF_OUuNE.

Fig. 6 Ctrl Shift Face. ‘Bill Hader Impersonates Arnold Schwarzenegger [DeepFake]’. YouTube, uploaded by Ctrl Shift Face, 10 May 2019, www.youtube.com/watch?v=bPhUhypV27w.

Fig. 7 The Congress. Directed by Ari Folman, performance by Robin Wright, Drafthouse Films, 2013.

Fig. 8 Yori Ettema. ‘Building my Computer’. YouTube, uploaded by Yori Ettema, 17 June 2020, https://www.youtube.com/watch?v=hnD8NONv7ZQ.

Fig. 9 Aligned Face Set. Created by Yori Ettema, 10 June 2020.

Fig. 10 Training Preview. Created by Yori Ettema, 13 June 2020.

Fig. 11 Face Merging Process. Created by Yori Ettema, 12 June 2020.

Fig. 12 Yori Ettema. ‘Tim Reaction, Monumental Memories’. YouTube, uploaded by Yori Ettema, 17 June 2020, https://www.youtube.com/watch?v=0E1oWcEd_cE.

Fig. 13 Yori Ettema. ‘Mariken Reaction, DeepMemories’. YouTube, uploaded by Yori Ettema, 19 June 2020, https://www.youtube.com/watch?v=RcKi76wfOcM.

Fig. 14 Yori Ettema. ‘Fransje Reaction, DeepMemories’. YouTube, uploaded by Yori Ettema, 19 June 2020, https://www.youtube.com/watch?v=JaAlXOP93ss.

Fig. 15 Yori Ettema. ‘Babeth Reaction, DeepMemories’. YouTube, uploaded by Yori Ettema, 17 June 2020, https://www.youtube.com/watch?v=WeNO1ZBWGLY.

Fig. 16 Training Preview Comparison. Created by Yori Ettema, 15 June 2020.

Fig. 17 Walt Disney Studios. ‘Disney’s Aladdin – Special Look: In Theaters May 24’. YouTube, uploaded by Walt Disney Studios, 11 Feb. 2019, www.youtube.com/watch?v=7hHECMVOq7g.

Fig. 18 Mori, Masahiro, et al. ‘The Uncanny Valley [From the Field]’. IEEE Robotics & Automation Magazine, vol. 19, no. 2, 2012, pp. 98–100. Crossref, doi:10.1109/mra.2012.2192811.

Fig. 19 DeepFakes Club. ‘I Taught an AI to Shave Henry Cavill’s Mustache’. YouTube, uploaded by DeepFakes Club, 6 Feb. 2018, www.youtube.com/watch?time_continue=2&v=2PZ3W1W20bk&feature=emb_logo.

Fig. 20 Yori Ettema. ‘Macbeth, Deepfake Experiment’. YouTube, uploaded by Yori Ettema, 17 June 2020, https://www.youtube.com/watch?v=aVpnZd7E2-E.

Fig. 21 1st Perspective. Created by Yori Ettema, 20 June 2020.

Fig. 22 3rd Perspective. Created by Yori Ettema, 20 June 2020.